Here we compute the evoked responses from raw data for the auditory Brainstorm tutorial dataset. For comparison, see [1].

Experiment:

- One subject, two acquisition runs of 6 minutes each.

- Each run contains 200 regular beeps and 40 easy deviant beeps.

- Random ISI: between 0.7 s and 1.7 s, uniformly distributed.

- The subject presses a button with the right index finger when detecting a deviant.
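To get a feel for the timing, the stimulus sequence of one run can be simulated. This is only a sketch under assumptions that the description does not state: the ISI is treated as the onset-to-onset interval, the deviants are placed at fully random positions, and the seed is arbitrary.

```python
import random

N_STANDARD, N_DEVIANT = 200, 40   # beeps per run (from the description)
ISI_MIN, ISI_MAX = 0.7, 1.7       # seconds

rng = random.Random(0)            # arbitrary seed, for reproducibility only

# Assumption: deviants are placed at random positions in the sequence.
labels = ['standard'] * N_STANDARD + ['deviant'] * N_DEVIANT
rng.shuffle(labels)

# Assumption: the ISI is measured from one beep onset to the next.
isis = [rng.uniform(ISI_MIN, ISI_MAX) for _ in labels]
total = sum(isis)

# Expected duration: number of beeps times the mean of the uniform ISI.
expected = len(labels) * (ISI_MIN + ISI_MAX) / 2.0
print('simulated: %.1f s, expected: %.1f s' % (total, expected))
```

Under these assumptions the expected stimulation time is 240 x 1.2 s = 288 s, which fits within a 6-minute (360 s) run.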

The specifications of this dataset were discussed initially on the FieldTrip bug tracker.

[1] Tadel F, Baillet S, Mosher JC, Pantazis D, Leahy RM. Brainstorm: A User-Friendly Application for MEG/EEG Analysis. Computational Intelligence and Neuroscience, vol. 2011, Article ID 879716, 13 pages, 2011. doi:10.1155/2011/879716

# Authors: Mainak Jas <mainak.jas@telecom-paristech.fr>

# Eric Larson <larson.eric.d@gmail.com>

# Jaakko Leppakangas <jaeilepp@student.jyu.fi>

#

# License: BSD (3-clause)

import os.path as op

import pandas as pd

import numpy as np

import mne

from mne import combine_evoked

from mne.minimum_norm import apply_inverse

from mne.datasets.brainstorm import bst_auditory

from mne.io import read_raw_ctf

from mne.filter import notch_filter, filter_data

print(__doc__)

To reduce memory consumption and running time, some of the steps are precomputed. To run everything from scratch, change this to False. With use_precomputed = False, the running time of this script can be several minutes even on a fast computer.

use_precomputed = True

The data was collected with a CTF 275 system at 2400 Hz and low-pass filtered at 600 Hz. Here the data and empty room data files are read to construct instances of mne.io.Raw.

data_path = bst_auditory.data_path()

subject = 'bst_auditory'

subjects_dir = op.join(data_path, 'subjects')

raw_fname1 = op.join(data_path, 'MEG', 'bst_auditory',

'S01_AEF_20131218_01.ds')

raw_fname2 = op.join(data_path, 'MEG', 'bst_auditory',

'S01_AEF_20131218_02.ds')

erm_fname = op.join(data_path, 'MEG', 'bst_auditory',

'S01_Noise_20131218_01.ds')

In memory saving mode we use preload=False and the memory-efficient IO, which loads the data on demand. However, filtering and some other functions require the data to be preloaded into memory.

preload = not use_precomputed

raw = read_raw_ctf(raw_fname1, preload=preload)

n_times_run1 = raw.n_times

mne.io.concatenate_raws([raw, read_raw_ctf(raw_fname2, preload=preload)])

raw_erm = read_raw_ctf(erm_fname, preload=preload)

Out:

ds directory : /home/ubuntu/mne_data/MNE-brainstorm-data/bst_auditory/MEG/bst_auditory/S01_AEF_20131218_01.ds

res4 data read.

hc data read.

Separate EEG position data file read.

Quaternion matching (desired vs. transformed):

2.51 74.26 0.00 mm <-> 2.51 74.26 -0.00 mm (orig : -56.69 50.20 -264.38 mm) diff = 0.000 mm

-2.51 -74.26 0.00 mm <-> -2.51 -74.26 0.00 mm (orig : 50.89 -52.31 -265.88 mm) diff = 0.000 mm

108.63 0.00 0.00 mm <-> 108.63 0.00 -0.00 mm (orig : 67.41 77.68 -239.53 mm) diff = 0.000 mm

Coordinate transformations established.

Reading digitizer points from [u'/home/ubuntu/mne_data/MNE-brainstorm-data/bst_auditory/MEG/bst_auditory/S01_AEF_20131218_01.ds/S01_20131218_01.pos']...

Polhemus data for 3 HPI coils added

Device coordinate locations for 3 HPI coils added

5 extra points added to Polhemus data.

Measurement info composed.

Finding samples for /home/ubuntu/mne_data/MNE-brainstorm-data/bst_auditory/MEG/bst_auditory/S01_AEF_20131218_01.ds/S01_AEF_20131218_01.meg4:

System clock channel is available, checking which samples are valid.

360 x 2400 = 864000 samples from 340 chs

Current compensation grade : 3

ds directory : /home/ubuntu/mne_data/MNE-brainstorm-data/bst_auditory/MEG/bst_auditory/S01_AEF_20131218_02.ds

res4 data read.

hc data read.

Separate EEG position data file read.

Quaternion matching (desired vs. transformed):

2.64 74.60 0.00 mm <-> 2.64 74.60 0.00 mm (orig : -58.07 49.23 -263.11 mm) diff = 0.000 mm

-2.64 -74.60 0.00 mm <-> -2.64 -74.60 0.00 mm (orig : 49.94 -53.82 -265.07 mm) diff = 0.000 mm

108.24 0.00 0.00 mm <-> 108.24 -0.00 0.00 mm (orig : 66.67 76.99 -243.39 mm) diff = 0.000 mm

Coordinate transformations established.

Reading digitizer points from [u'/home/ubuntu/mne_data/MNE-brainstorm-data/bst_auditory/MEG/bst_auditory/S01_AEF_20131218_02.ds/S01_20131218_01.pos']...

Polhemus data for 3 HPI coils added

Device coordinate locations for 3 HPI coils added

5 extra points added to Polhemus data.

Measurement info composed.

Finding samples for /home/ubuntu/mne_data/MNE-brainstorm-data/bst_auditory/MEG/bst_auditory/S01_AEF_20131218_02.ds/S01_AEF_20131218_02.meg4:

System clock channel is available, checking which samples are valid.

360 x 2400 = 864000 samples from 340 chs

Current compensation grade : 3

ds directory : /home/ubuntu/mne_data/MNE-brainstorm-data/bst_auditory/MEG/bst_auditory/S01_Noise_20131218_01.ds

res4 data read.

hc data read.

Separate EEG position data file read.

Quaternion matching (desired vs. transformed):

0.00 80.00 0.00 mm <-> 0.00 80.00 0.00 mm (orig : -56.57 56.57 -270.00 mm) diff = 0.000 mm

0.00 -80.00 0.00 mm <-> 0.00 -80.00 0.00 mm (orig : 56.57 -56.57 -270.00 mm) diff = 0.000 mm

80.00 0.00 0.00 mm <-> 80.00 -0.00 0.00 mm (orig : 56.57 56.57 -270.00 mm) diff = 0.000 mm

Coordinate transformations established.

Polhemus data for 3 HPI coils added

Device coordinate locations for 3 HPI coils added

Measurement info composed.

Finding samples for /home/ubuntu/mne_data/MNE-brainstorm-data/bst_auditory/MEG/bst_auditory/S01_Noise_20131218_01.ds/S01_Noise_20131218_01.meg4:

System clock channel is available, checking which samples are valid.

15 x 4800 = 72000 samples from 301 chs

Current compensation grade : 3
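A rough back-of-the-envelope calculation shows why loading on demand matters here. The sample and channel counts are taken from the reader output above; the 8 bytes per sample is an assumption (double-precision storage once the data is in memory).

```python
sfreq = 2400.0        # Hz, from the acquisition description
duration = 360.0      # s, "360 x 2400" in the reader output
n_channels = 340      # from the reader output
bytes_per_sample = 8  # assumption: float64 storage once loaded

n_samples = int(duration * sfreq)
n_bytes = n_channels * n_samples * bytes_per_sample

print('%d samples per channel' % n_samples)
print('%.2f GB per run' % (n_bytes / 1e9))
```

Under that assumption each of the two runs takes a bit over 2 GB when fully preloaded, before any copies made during filtering.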

The data channel array consisted of 274 MEG axial gradiometers, 26 MEG reference sensors and 2 EEG electrodes (Cz and Pz). In addition:

- 1 stim channel for marking presentation times for the stimuli

- 1 audio channel for the sent signal

- 1 response channel for recording the button presses

- 1 ECG bipolar

- 2 EOG bipolar (vertical and horizontal)

- 12 head tracking channels

- 20 unused channels
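As a quick consistency check, the counts listed here add up to the 340 channels reported in the reader output for the two runs. The dictionary keys are just descriptive labels, not MNE channel types.

```python
# Channel counts as listed in the text (labels are descriptive only).
channel_counts = {
    'MEG axial gradiometers': 274,
    'MEG reference sensors': 26,
    'EEG': 2,
    'stim': 1,
    'audio': 1,
    'response': 1,
    'ECG': 1,
    'EOG': 2,
    'head tracking': 12,
    'unused': 20,
}

total = sum(channel_counts.values())
print(total)  # 340
```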

The head tracking channels and the unused channels are marked as misc channels. Here we define the EOG and ECG channels.

raw.set_channel_types({'HEOG': 'eog', 'VEOG': 'eog', 'ECG': 'ecg'})

if not use_precomputed:
    # Leave out the two EEG channels for easier computation of forward.
    raw.pick_types(meg=True, eeg=False, stim=True, misc=True, eog=True,
                   ecg=True)

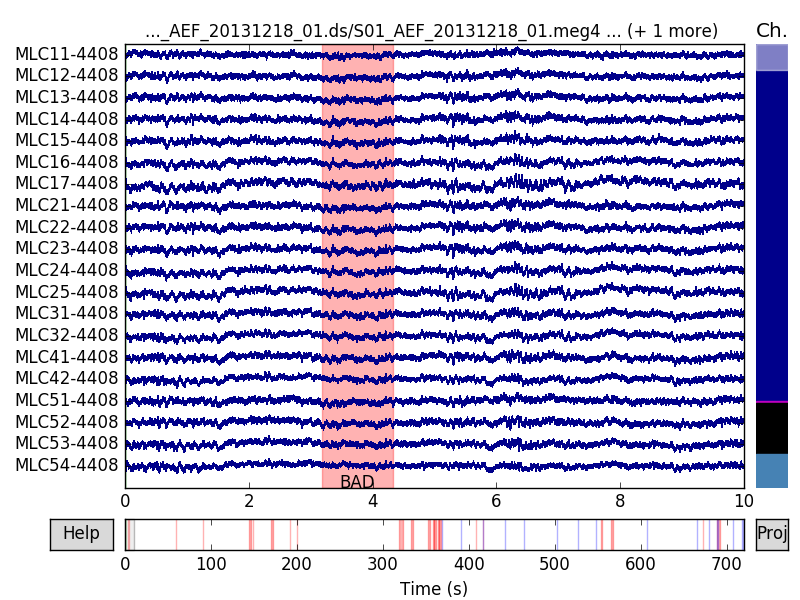

For noise reduction, a set of bad segments has been identified and stored in csv files. The bad segments are later used to reject epochs that overlap with them. The file for the second run also contains some saccades, which are removed by using SSP. We use pandas to read the data from the csv files. You can also view the files with your favorite text editor.

annotations_df = pd.DataFrame()

offset = n_times_run1

for idx in [1, 2]:
    csv_fname = op.join(data_path, 'MEG', 'bst_auditory',
                        'events_bad_0%s.csv' % idx)
    df = pd.read_csv(csv_fname, header=None,
                     names=['onset', 'duration', 'id', 'label'])
    print('Events from run {0}:'.format(idx))
    print(df)
    # Shift the onsets of the second run past the end of the first run.
    df['onset'] += offset * (idx - 1)
    annotations_df = pd.concat([annotations_df, df], axis=0)

# df still holds the second run here, the only run with saccades.
saccades_events = df[df['label'] == 'saccade'].values[:, :3].astype(int)

# Conversion from samples to times:

onsets = annotations_df['onset'].values / raw.info['sfreq']

durations = annotations_df['duration'].values / raw.info['sfreq']

descriptions = annotations_df['label'].values

annotations = mne.Annotations(onsets, durations, descriptions)

raw.annotations = annotations

del onsets, durations, descriptions

Out:

Events from run 1:

onset duration id label

0 7625 2776 1 BAD

1 142459 892 1 BAD

2 216954 460 1 BAD

3 345135 5816 1 BAD

4 357687 1053 1 BAD

5 409101 3736 1 BAD

6 461110 179 1 BAD

7 479866 426 1 BAD

8 764914 11500 1 BAD

9 798174 6589 1 BAD

10 846880 5383 1 BAD

11 858863 5136 1 BAD

Events from run 2:

onset duration id label

0 9 5583 1 BAD

1 9256 3114 1 BAD

2 14287 3456 1 BAD

3 116432 228 1 BAD

4 134489 1329 1 BAD

5 464527 4727 1 BAD

6 494136 4519 1 BAD

7 749288 189 1 BAD

8 788623 7937 1 BAD

9 21179 0 1 saccade

10 72993 0 1 saccade

11 134527 0 1 saccade

12 196555 0 1 saccade

13 249894 0 1 saccade

14 343357 0 1 saccade

15 400771 0 1 saccade

16 450256 0 1 saccade

17 593101 0 1 saccade

18 733942 0 1 saccade

19 765939 0 1 saccade

20 789476 0 1 saccade

21 792852 0 1 saccade

22 833208 0 1 saccade

23 859869 0 1 saccade

24 862888 0 1 saccade
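The offset and the conversion from samples to seconds can be checked by hand on a couple of rows from the tables above. This is a dataset-free sketch; to_seconds is a hypothetical helper, and the run length of 864000 samples is taken from the reader output.

```python
SFREQ = 2400.0          # Hz
N_TIMES_RUN1 = 864000   # samples in run 1 (from the reader output)

# (run, onset, duration) in samples, copied from the printed tables.
segments = [
    (1, 7625, 2776),
    (2, 9256, 3114),
]


def to_seconds(run, onset, duration):
    """Shift run-2 onsets past run 1, then convert samples to seconds."""
    onset += N_TIMES_RUN1 * (run - 1)
    return onset / SFREQ, duration / SFREQ


for seg in segments:
    onset_s, duration_s = to_seconds(*seg)
    print('run %d: onset %.3f s, duration %.3f s'
          % (seg[0], onset_s, duration_s))
```

The second-run segment ends up a little after 360 s, that is, just past the end of the first 6-minute run in the concatenated recording.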

Here we compute the saccade and EOG projectors for magnetometers and add them to the raw data. The projectors are added to both runs.

saccade_epochs = mne.Epochs(raw, saccades_events, 1, 0., 0.5, preload=True,

reject_by_annotation=False)

projs_saccade = mne.compute_proj_epochs(saccade_epochs, n_mag=1, n_eeg=0,

desc_prefix='saccade')

if use_precomputed:
    proj_fname = op.join(data_path, 'MEG', 'bst_auditory',
                         'bst_auditory-eog-proj.fif')
    projs_eog = mne.read_proj(proj_fname)[0]
else:
    projs_eog, _ = mne.preprocessing.compute_proj_eog(raw.load_data(),
                                                      n_mag=1, n_eeg=0)

raw.add_proj(projs_saccade)

raw.add_proj(projs_eog)

del saccade_epochs, saccades_events, projs_eog, projs_saccade # To save memory

Out:

16 matching events found

0 projection items activated

Loading data for 16 events and 1201 original time points ...

1 bad epochs dropped

No gradiometers found. Forcing n_grad to 0

Adding projection: axial-saccade-PCA-01

Read a total of 1 projection items:

EOG-axial-998--0.200-0.200-PCA-01 (1 x 274) idle

1 projection items deactivated

1 projection items deactivated
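Conceptually, an SSP projector removes the part of the signal that lies along an artifact's spatial pattern. The sketch below shows the idea with NumPy on synthetic data. It is a simplified illustration with made-up dimensions and a known pattern, not the computation MNE performs internally (there the pattern is estimated from the epochs by PCA).

```python
import numpy as np

rng = np.random.RandomState(0)
n_channels, n_times = 5, 1000            # toy dimensions (hypothetical)

# Unit-norm spatial pattern of the artifact (e.g. a saccade topography).
u = rng.randn(n_channels)
u /= np.linalg.norm(u)

brain = rng.randn(n_channels, n_times)             # stand-in for brain signal
artifact = np.outer(u, 10 * rng.randn(n_times))    # artifact along pattern u
data = brain + artifact

# Projector onto the subspace orthogonal to u.
proj = np.eye(n_channels) - np.outer(u, u)
cleaned = proj.dot(data)

# Nothing is left along u after the projection.
print(np.allclose(u.dot(cleaned), 0))
```

Each such projector removes one dimension from the data, which is consistent with the rank of 268 reported for 270 channels in the inverse operator output further down, where two projectors are active.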

Visually inspect the effects of the projections. Click the ‘proj’ button at the bottom right corner to toggle the projectors on/off. EOG events can be plotted by adding the event list as a keyword argument. As the bad segments and saccades were added as annotations to the raw data, they are plotted as well.

raw.plot(block=True)

A typical preprocessing step is the removal of the power line artifact (50 Hz or 60 Hz). Here we notch filter the data at 60, 120 and 180 Hz to remove the original 60 Hz artifact and its harmonics. The power spectra are plotted before and after the filtering to show the effect. The drop after 600 Hz appears because the data was low-pass filtered during the acquisition. In memory saving mode we do the filtering at the evoked stage, which is not something you would usually do.

if not use_precomputed:
    meg_picks = mne.pick_types(raw.info, meg=True, eeg=False)
    raw.plot_psd(tmax=np.inf, picks=meg_picks)
    notches = np.arange(60, 181, 60)
    raw.notch_filter(notches)
    raw.plot_psd(tmax=np.inf, picks=meg_picks)

We also low-pass filter the data at 100 Hz to remove the high-frequency components.

if not use_precomputed:
    raw.filter(None, 100., h_trans_bandwidth=0.5, filter_length='10s',
               phase='zero-double')

Epoching and averaging. First some parameters are defined and events extracted from the stimulus channel (UPPT001). The rejection thresholds are defined as peak-to-peak values and are in T / m for gradiometers, T for magnetometers and V for EOG and EEG channels.

tmin, tmax = -0.1, 0.5

event_id = dict(standard=1, deviant=2)

reject = dict(mag=4e-12, eog=250e-6)

# find events

events = mne.find_events(raw, stim_channel='UPPT001')

Out:

480 events found

Events id: [1 2]
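The peak-to-peak criterion behind the reject dictionary can be sketched in a few lines. The thresholds are the ones defined above; the epoch values and the helper names (peak_to_peak, rejection_reasons) are hypothetical and only illustrate the comparison, not how MNE implements it.

```python
REJECT = {'mag': 4e-12, 'eog': 250e-6}   # thresholds from the tutorial


def peak_to_peak(samples):
    """Difference between the largest and the smallest sample."""
    return max(samples) - min(samples)


def rejection_reasons(epoch, ch_types, reject=REJECT):
    """Return the channels whose peak-to-peak amplitude exceeds the limit."""
    return [name for name, samples in epoch.items()
            if peak_to_peak(samples) > reject[ch_types[name]]]


# Hypothetical epoch: channel name -> samples (T for MEG, V for EOG).
ch_types = {'MLP52-4408': 'mag', 'VEOG': 'eog'}
epoch = {
    'MLP52-4408': [-1e-12, 0.5e-12, 1e-12],   # 2e-12 T, below 4e-12
    'VEOG': [-100e-6, 50e-6, 200e-6],         # 300e-6 V, above 250e-6
}

print(rejection_reasons(epoch, ch_types))
```

An epoch is dropped as soon as at least one channel is returned, which is what the "Rejecting epoch based on ..." messages in the later output report.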

The event timing is adjusted by comparing the trigger times with the sound onsets detected on channel UADC001-4408.

sound_data = raw[raw.ch_names.index('UADC001-4408')][0][0]

onsets = np.where(np.abs(sound_data) > 2. * np.std(sound_data))[0]

min_diff = int(0.5 * raw.info['sfreq'])

diffs = np.concatenate([[min_diff + 1], np.diff(onsets)])

onsets = onsets[diffs > min_diff]

assert len(onsets) == len(events)

diffs = 1000. * (events[:, 0] - onsets) / raw.info['sfreq']

print('Trigger delay removed (μ ± σ): %0.1f ± %0.1f ms'

% (np.mean(diffs), np.std(diffs)))

events[:, 0] = onsets

del sound_data, diffs

Out:

Trigger delay removed (μ ± σ): -14.0 ± 0.3 ms
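The onset detection can be tried on a synthetic audio channel to see how the thresholding and the minimum-distance rule interact. Everything about the signal below is made up for illustration (sampling rate, burst shape, a fixed 14-sample lead of the trigger); only the detection logic mirrors the code above.

```python
import numpy as np

sfreq = 1000.0                        # Hz (toy value, not the dataset's)
true_onsets = np.array([500, 2000, 3500])
delay = 14                            # trigger leads the sound by 14 samples

# Synthetic audio channel: silence with three 100-sample bursts.
sound = np.zeros(5000)
for onset in true_onsets:
    sound[onset:onset + 100] = 1.0
trigger_samples = true_onsets - delay

# Same logic as in the tutorial code: threshold at two standard
# deviations, then keep only samples that start a new burst.
above = np.where(np.abs(sound) > 2. * np.std(sound))[0]
min_diff = int(0.5 * sfreq)
diffs = np.concatenate([[min_diff + 1], np.diff(above)])
onsets = above[diffs > min_diff]

delays_ms = 1000. * (trigger_samples - onsets) / sfreq
print(onsets, delays_ms.mean())
```

Prepending min_diff + 1 to the differences guarantees that the very first supra-threshold sample is kept as an onset.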

We mark a set of bad channels that seem noisier than others. This can also be done interactively with raw.plot by clicking the channel name (or the line). The marked channels are added as bad when the browser window is closed.

raw.info['bads'] = ['MLO52-4408', 'MRT51-4408', 'MLO42-4408', 'MLO43-4408']

The epochs (trials) are created for MEG channels. First we find the picks for MEG and EOG channels. Then the epochs are constructed using these picks. Epochs overlapping with annotated bad segments are also rejected by default. To turn off rejection by bad segments (as was done earlier with saccades), use the keyword reject_by_annotation=False.

picks = mne.pick_types(raw.info, meg=True, eeg=False, stim=False, eog=True,

exclude='bads')

epochs = mne.Epochs(raw, events, event_id, tmin, tmax, picks=picks,

baseline=(None, 0), reject=reject, preload=False,

proj=True)

Out:

480 matching events found

Created an SSP operator (subspace dimension = 2)

2 projection items activated

We only use the first 40 good epochs from each run. Since we first drop the bad epochs, the indices of the epochs are no longer the same as in the original epochs collection. Investigation of the event timings reveals that the first epoch from the second run corresponds to index 182.

epochs.drop_bad()

epochs_standard = mne.concatenate_epochs([epochs['standard'][range(40)],

epochs['standard'][182:222]])

epochs_standard.load_data() # Resampling to save memory.

epochs_standard.resample(600, npad='auto')

epochs_deviant = epochs['deviant'].load_data()

epochs_deviant.resample(600, npad='auto')

del epochs, picks

Out:

Loading data for 480 events and 1441 original time points ...

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on MAG : [u'MLP52-4408']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on EOG : [u'HEOG']

Rejecting epoch based on EOG : [u'HEOG']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on MAG : [u'MLP52-4408']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on EOG : [u'HEOG']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on EOG : [u'HEOG']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on EOG : [u'HEOG']

Rejecting epoch based on EOG : [u'HEOG']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on EOG : [u'HEOG']

Rejecting epoch based on EOG : [u'VEOG']

Rejecting epoch based on EOG : [u'VEOG']

40 bad epochs dropped

Loading data for 40 events and 1441 original time points ...

Loading data for 40 events and 1441 original time points ...

80 matching events found

Created an SSP operator (subspace dimension = 2)

0 bad epochs dropped

Loading data for 75 events and 1441 original time points ...
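Instead of reading an index such as 182 off by eye, one could search the sample positions of the retained events for the first one that falls in the second run. The sketch below uses hypothetical event samples; with the real data the input would be the event samples of the kept standard epochs, and the result would depend on which epochs were dropped.

```python
from bisect import bisect_left

n_times_run1 = 864000   # samples in run 1 (from the reader output)

# Hypothetical, sorted sample positions of the epochs that survived
# rejection (the real values come from the data).
kept_samples = [1200, 4000, 860000, 866500, 870100]

# Index of the first kept epoch whose event lies in the second run.
first_run2 = bisect_left(kept_samples, n_times_run1)
print(first_run2)  # 3
```

This relies on the events being sorted in time, which holds here because the second run is appended after the first.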

The average for each condition is computed.

evoked_std = epochs_standard.average()

evoked_dev = epochs_deviant.average()

del epochs_standard, epochs_deviant

A typical preprocessing step is the removal of the power line artifact (50 Hz or 60 Hz). Here we notch filter the data at 60, 120 and 180 Hz to remove the original 60 Hz artifact and its harmonics, and then low-pass filter at 100 Hz. Normally this would be done on the raw data (with mne.io.Raw.notch_filter() and mne.io.Raw.filter()), but to reduce the memory consumption of this tutorial, we do it at the evoked stage.

if use_precomputed:
    sfreq = evoked_std.info['sfreq']
    notches = [60, 120, 180]
    for evoked in (evoked_std, evoked_dev):
        evoked.data[:] = notch_filter(evoked.data, sfreq, notches)
        evoked.data[:] = filter_data(evoked.data, sfreq, l_freq=None,
                                     h_freq=100.)

Out:

Setting up band-stop filter

Filter length of 7920 samples (13.200 sec) selected

filter_length (7921) is longer than the signal (360), distortion is likely. Reduce filter length or filter a longer signal.

Setting up low-pass filter at 1e+02 Hz

h_trans_bandwidth chosen to be 25.0 Hz

Filter length of 158 samples (0.263 sec) selected

Setting up band-stop filter

Filter length of 7920 samples (13.200 sec) selected

filter_length (7921) is longer than the signal (360), distortion is likely. Reduce filter length or filter a longer signal.

Setting up low-pass filter at 1e+02 Hz

h_trans_bandwidth chosen to be 25.0 Hz

Filter length of 158 samples (0.263 sec) selected
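The sample counts that appear in the output (1441 and 1201 original time points, and the signal length of 360 in the filter warning) follow from the epoch windows and the sampling rates. The sketch below reproduces them; n_samples is a hypothetical helper, and how exactly the resampled length is rounded is an assumption, although 360.25 gives 360 whether it is rounded down or to the nearest integer.

```python
def n_samples(tmin, tmax, sfreq):
    """Number of samples in a window that includes both end points."""
    return int(round((tmax - tmin) * sfreq)) + 1


sfreq_raw, sfreq_new = 2400.0, 600.0

n_epoch = n_samples(-0.1, 0.5, sfreq_raw)       # main epochs
n_saccade = n_samples(0.0, 0.5, sfreq_raw)      # saccade epochs
n_resampled = n_epoch * sfreq_new / sfreq_raw   # before rounding

print(n_epoch, n_saccade, n_resampled)
```

A 360-sample signal at 600 Hz is only 0.6 s long, which is why the 13.2 s notch filter triggers the distortion warning when filtering is done at the evoked stage.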

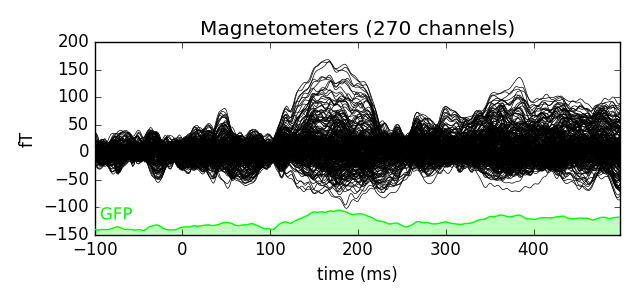

Here we plot the ERF of the standard and deviant conditions. In both conditions we can see the P50 and N100 responses. The mismatch negativity is visible only in the deviant condition around 100-200 ms. P200 is also visible around 170 ms in both conditions but is much stronger in the standard condition. P300 is visible in the deviant condition only (decision making in preparation of the button press). You can view the topographies for a certain time span by selecting an area while holding down the left mouse button.

evoked_std.plot(window_title='Standard', gfp=True)

evoked_dev.plot(window_title='Deviant', gfp=True)

Show activations as topography figures.

times = np.arange(0.05, 0.301, 0.025)

evoked_std.plot_topomap(times=times, title='Standard')

evoked_dev.plot_topomap(times=times, title='Deviant')

We can see the MMN effect more clearly by looking at the difference between the two conditions. P50 and N100 are no longer visible, but MMN/P200 and P300 are emphasised.

evoked_difference = combine_evoked([evoked_dev, -evoked_std], weights='equal')

evoked_difference.plot(window_title='Difference', gfp=True)

Source estimation. We compute the noise covariance matrix from the empty room measurement and use it for the other runs.

reject = dict(mag=4e-12)

cov = mne.compute_raw_covariance(raw_erm, reject=reject)

cov.plot(raw_erm.info)

del raw_erm

Out:

Using up to 149 segments

Number of samples used : 71520

[done]
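The numbers in this output can be related to the length of the empty room recording. The sketch assumes that the covariance is estimated from consecutive 0.2 s segments and that the empty room file shares the 2400 Hz sampling rate; neither is stated in the output, but both are consistent with it.

```python
sfreq = 2400.0          # Hz (assumed for the empty room recording)
n_samples_erm = 72000   # from the reader output
tstep = 0.2             # s per segment (assumption, see above)

seg_len = int(round(tstep * sfreq))     # samples per segment
n_full = n_samples_erm // seg_len       # segments that fit in the file

print(seg_len, n_full, 149 * seg_len)
```

With 480 samples per segment, the 149 segments in the log account for exactly the 71520 samples reported as used, one segment fewer than the 150 that would fit in the file.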

The transformation is read from a file. For more information about coregistering the data, see Interactive analysis with mne_analyze or mne.gui.coregistration().

trans_fname = op.join(data_path, 'MEG', 'bst_auditory',

'bst_auditory-trans.fif')

trans = mne.read_trans(trans_fname)

To save time and memory, the forward solution is read from a file. Set use_precomputed=False at the beginning of this script to build the forward solution from scratch. The head surfaces for constructing a BEM solution are read from a file. Since the data only contains MEG channels, we only need the inner skull surface for making the forward solution. For more information, see Cortical surface reconstruction with FreeSurfer, mne.setup_source_space(), Creating the BEM meshes, and mne.bem.make_watershed_bem().

if use_precomputed:
    fwd_fname = op.join(data_path, 'MEG', 'bst_auditory',
                        'bst_auditory-meg-oct-6-fwd.fif')
    fwd = mne.read_forward_solution(fwd_fname)
else:
    src = mne.setup_source_space(subject, spacing='ico4',
                                 subjects_dir=subjects_dir, overwrite=True)
    model = mne.make_bem_model(subject=subject, ico=4, conductivity=[0.3],
                               subjects_dir=subjects_dir)
    bem = mne.make_bem_solution(model)
    fwd = mne.make_forward_solution(evoked_std.info, trans=trans, src=src,
                                    bem=bem)

inv = mne.minimum_norm.make_inverse_operator(evoked_std.info, fwd, cov)

snr = 3.0

lambda2 = 1.0 / snr ** 2

del fwd

Out:

Reading forward solution from /home/ubuntu/mne_data/MNE-brainstorm-data/bst_auditory/MEG/bst_auditory/bst_auditory-meg-oct-6-fwd.fif...

Reading a source space...

Computing patch statistics...

Patch information added...

Distance information added...

[done]

Reading a source space...

Computing patch statistics...

Patch information added...

Distance information added...

[done]

2 source spaces read

Desired named matrix (kind = 3523) not available

Read MEG forward solution (8196 sources, 270 channels, free orientations)

Source spaces transformed to the forward solution coordinate frame

Cartesian source orientations...

[done]

Converting to surface-based source orientations...

Average patch normals will be employed in the rotation to the local surface coordinates....

[done]

Computing inverse operator with 270 channels.

Created an SSP operator (subspace dimension = 2)

estimated rank (mag): 268

Setting small MEG eigenvalues to zero.

Not doing PCA for MEG.

Total rank is 268

Creating the depth weighting matrix...

270 magnetometer or axial gradiometer channels

limit = 8033/8196 = 10.015871

scale = 6.10585e-11 exp = 0.8

Computing inverse operator with 270 channels.

Creating the source covariance matrix

Applying loose dipole orientations. Loose value of 0.2.

Whitening the forward solution.

Adjusting source covariance matrix.

Computing SVD of whitened and weighted lead field matrix.

largest singular value = 7.80635

scaling factor to adjust the trace = 2.95615e+19
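The regularization parameter lambda2 used when applying the inverse is derived from an assumed amplitude signal-to-noise ratio of the evoked data. The short sketch below only illustrates that relation; the alternative SNR values in the loop are hypothetical.

```python
snr = 3.0
lambda2 = 1.0 / snr ** 2
print('lambda2 = %.4f' % lambda2)  # lambda2 = 0.1111

# A lower assumed SNR gives a larger lambda2, i.e. stronger regularization.
for assumed_snr in (1.0, 2.0, 3.0):
    print(assumed_snr, 1.0 / assumed_snr ** 2)
```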

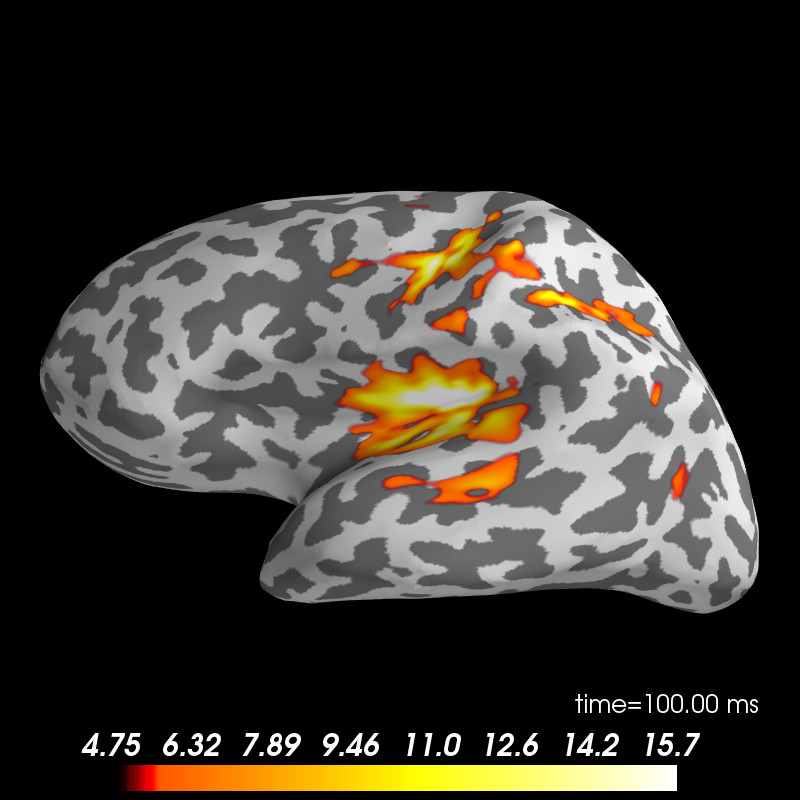

The sources are computed using the dSPM method and plotted on an inflated brain surface. For interactive control over the image, use the keyword time_viewer=True.

Standard condition.

stc_standard = mne.minimum_norm.apply_inverse(evoked_std, inv, lambda2, 'dSPM')

brain = stc_standard.plot(subjects_dir=subjects_dir, subject=subject,

surface='inflated', time_viewer=False, hemi='lh',

initial_time=0.1, time_unit='s')

del stc_standard, brain

Out:

Preparing the inverse operator for use...

Scaled noise and source covariance from nave = 1 to nave = 80

Created the regularized inverter

Created an SSP operator (subspace dimension = 2)

Created the whitener using a full noise covariance matrix (2 small eigenvalues omitted)

Computing noise-normalization factors (dSPM)...

[done]

Picked 270 channels from the data

Computing inverse...

(eigenleads need to be weighted)...

combining the current components...

(dSPM)...

[done]

Updating smoothing matrix, be patient..

Smoothing matrix creation, step 1

Smoothing matrix creation, step 2

Smoothing matrix creation, step 3

Smoothing matrix creation, step 4

Smoothing matrix creation, step 5

Smoothing matrix creation, step 6

Smoothing matrix creation, step 7

Smoothing matrix creation, step 8

Smoothing matrix creation, step 9

Smoothing matrix creation, step 10

colormap: fmin=4.75e+00 fmid=5.62e+00 fmax=1.57e+01 transparent=1

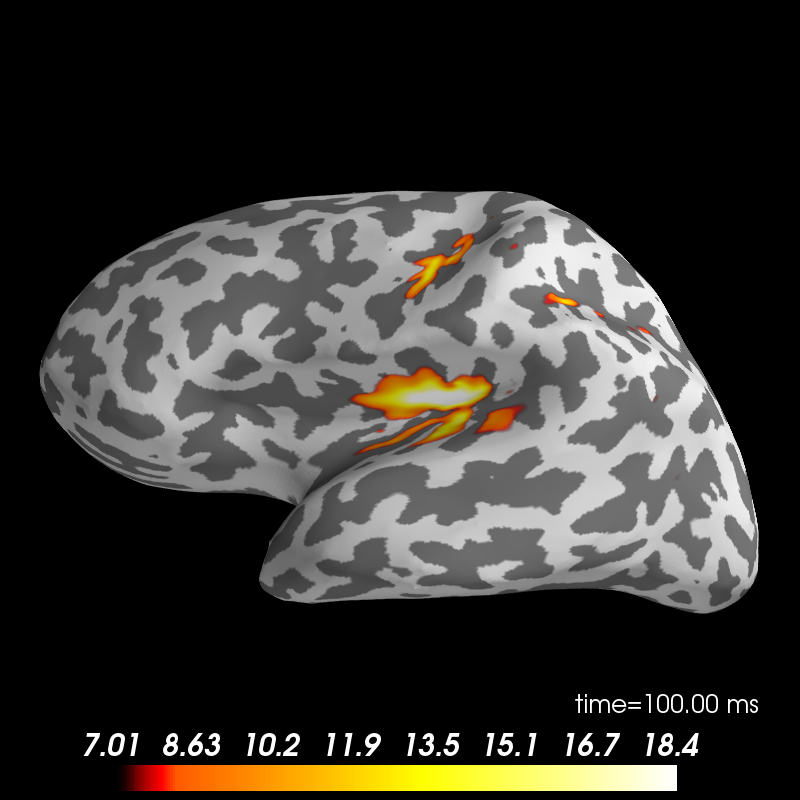

Deviant condition.

stc_deviant = mne.minimum_norm.apply_inverse(evoked_dev, inv, lambda2, 'dSPM')

brain = stc_deviant.plot(subjects_dir=subjects_dir, subject=subject,

surface='inflated', time_viewer=False, hemi='lh',

initial_time=0.1, time_unit='s')

del stc_deviant, brain

Out:

Preparing the inverse operator for use...

Scaled noise and source covariance from nave = 1 to nave = 75

Created the regularized inverter

Created an SSP operator (subspace dimension = 2)

Created the whitener using a full noise covariance matrix (2 small eigenvalues omitted)

Computing noise-normalization factors (dSPM)...

[done]

Picked 270 channels from the data

Computing inverse...

(eigenleads need to be weighted)...

combining the current components...

(dSPM)...

[done]

Updating smoothing matrix, be patient..

Smoothing matrix creation, step 1

Smoothing matrix creation, step 2

Smoothing matrix creation, step 3

Smoothing matrix creation, step 4

Smoothing matrix creation, step 5

Smoothing matrix creation, step 6

Smoothing matrix creation, step 7

Smoothing matrix creation, step 8

Smoothing matrix creation, step 9

Smoothing matrix creation, step 10

colormap: fmin=7.01e+00 fmid=8.16e+00 fmax=1.84e+01 transparent=1

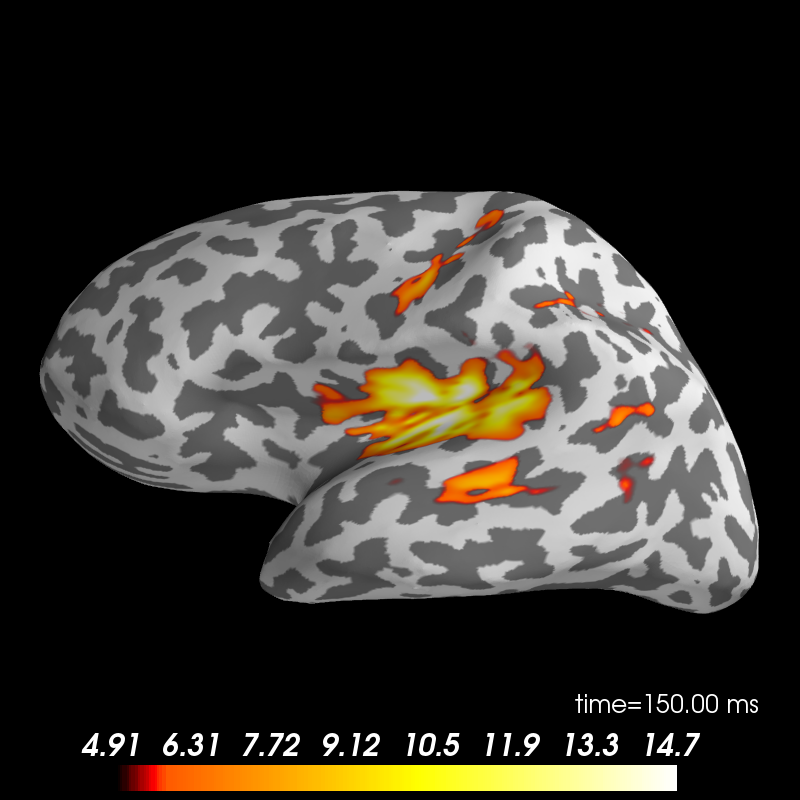

Difference.

stc_difference = apply_inverse(evoked_difference, inv, lambda2, 'dSPM')

brain = stc_difference.plot(subjects_dir=subjects_dir, subject=subject,

surface='inflated', time_viewer=False, hemi='lh',

initial_time=0.15, time_unit='s')

Out:

Preparing the inverse operator for use...

Scaled noise and source covariance from nave = 1 to nave = 155

Created the regularized inverter

Created an SSP operator (subspace dimension = 2)

Created the whitener using a full noise covariance matrix (2 small eigenvalues omitted)

Computing noise-normalization factors (dSPM)...

[done]

Picked 270 channels from the data

Computing inverse...

(eigenleads need to be weighted)...

combining the current components...

(dSPM)...

[done]

Updating smoothing matrix, be patient..

Smoothing matrix creation, step 1

Smoothing matrix creation, step 2

Smoothing matrix creation, step 3

Smoothing matrix creation, step 4

Smoothing matrix creation, step 5

Smoothing matrix creation, step 6

Smoothing matrix creation, step 7

Smoothing matrix creation, step 8

Smoothing matrix creation, step 9

Smoothing matrix creation, step 10

colormap: fmin=4.91e+00 fmid=5.74e+00 fmax=1.47e+01 transparent=1

Total running time of the script: ( 1 minutes 27.351 seconds)